Last Modified on August 17, 2023

Do you want to use BigQuery but are unfamiliar with SQL?

Today, BigQuery is impossible to ignore, and everyone says you should use it with Google Analytics 4. However, many people refrain from using this tool upon learning that they need to learn SQL programming.

Here comes ChatGPT to save the day, allowing you to benefit from its powerful analytics capabilities even with zero SQL knowledge. In this guide, we’ll teach you how to use BigQuery with ChatGPT.

To run queries in BigQuery, we will need to navigate its interface. It can look scary but do not fear, as we will go through it thoroughly.

Here is an overview of what we’ll cover:

- Running Queries in BigQuery

- Creating a BigQuery Project

- Accessing BigQuery Public Datasets

- Managing Costs

- Retrieving Data from BigQuery with ChatGPT

- Retrieving Specific Columns & Rows

- Filter Records

- SQL Dates

- Retrieving GA4 Event Parameters

- Unneseting Fields (Getting GA4 Event Parameters)

Let’s dive in!

Running Queries in BigQuery

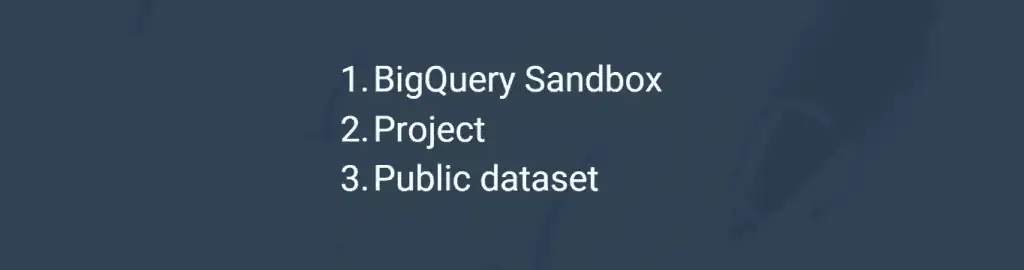

To run queries, we need the following three things:

First, we need a BigQuery sandbox account so you can play with data for free. No credit card or billing is required unless you decide to upgrade. Next, we need a project which is a place where BigQuery data is stored. Lastly, we need to access a dataset.

First, log in to your Google account, and let’s begin!

Go to your search engine and search for google cloud console. Scroll down and select the Google Cloud Platform.

You can also select Google Cloud console – Web UI Admin.

Alternatively, you can go to Google Cloud directly by typing console.cloud.google.com on the address bar.

If you’re an existing user, you will see a page like the one below where you will see the project you’re working on and the different projects you can access.

For new users, select your country, agree to the terms of service, and click Agree and Continue.

We have reached the homepage.

Creating a BigQuery Project

There are two ways of creating a new BigQuery project.

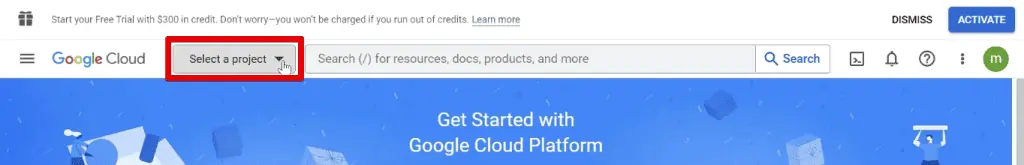

The first way is to click Select a Project.

Next, click New Project.

We prefer to go to the BigQuery workspace and create a project there.

Open the navigation menu, scroll down, and click BigQuery.

We are now in the SQL workspace. Click Create Project.

Provide a name for the project. If you want to change the project ID, click Edit. Finally, click Create.

We will see a welcome screen. Click Done.

We now have our BigQuery project in the sandbox account.

Accessing BigQuery Public Datasets

There are multiple ways to access public BigQuery datasets.

To achieve this quickly, go to the BigQuery sample dataset for GA4. Click the ga4_obfuscated_sample_ecommerce dataset.

This link opens the table with the data we need to query.

To do the same in the SQL workspace, go to the search bar and type public. Initially, we will see 0 results. Click Search All Projects to broaden our search.

Expand the bigquery-public-data and click Show More.

Scroll down and select the ga4_obfuscated_sample_ecommerce dataset.

Now, clicking on the dataset is not where you will see your data. To understand this more clearly, note that the first level pertains to a project. Within your projects, you have the datasets.

Selecting your datasets will only give you information about the dataset. To find your data, expand your dataset, and you’ll find it below.

For example, expand our dataset. Select the table called events.

A great document of reference is the GA4 BigQuery Export Schema.

This document is not mandatory, but it is nice to have to help understand how GA4 data is structured in BigQuery, the names of the columns, and the data types. You can know what’s available and what to use with ChatGPT.

We are using a free account, but we will still cover some best practices for avoiding or mitigating costs when dealing with company data.

Managing Costs

First, go to Details and scroll below to see the dataset size. Since we are still dealing with megabytes, we are still safe.

If you see data above the gigabytes or terabytes size, be prepared to pay some money.

Next, go to the table info section and copy the table ID.

Next, click Query → In New Tab.

To quickly demonstrate how to load query data, let’s try the following code:

SELECT *

FROM [your-table-id]The above code lets us retrieve all the data available in the table. Paste this code and pay attention to the message at the right.

First, we have a green check mark indicating the query is valid. The message beside this lets us know if there are any costs involved.

Because we are dealing with megabytes, we are still within the free tier. If the query size is above gigabytes or terabytes, you have to make edits to your query and make it fall back to the free tier.

After trimming down the query, if the size is still a gigabyte or more, only query the data you need to minimize costs. Remove all unnecessary rows or columns.

At this point, ChatGPT is extremely useful if you have limited knowledge of SQL or if you want to simplify the query writing process.

Retrieving Data from BigQuery with ChatGPT

Now, let’s go to ChatGPT.

Ask it to retrieve everything from our BigQuery table.

ChatGPT will write a code for us and provide a quick explanation below. Click Copy code.

Paste the code back to BigQuery and click Run.

After running the code, we can see the query results at the bottom.

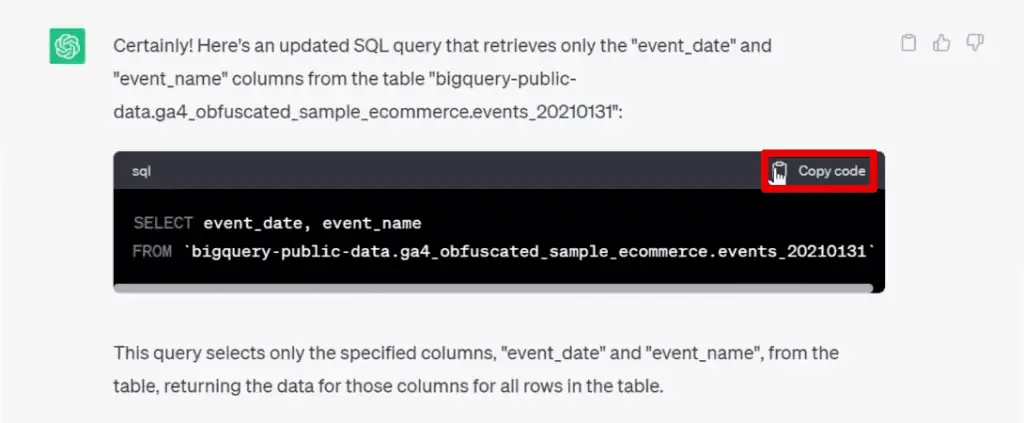

Now, let’s crank it up a little. Let’s say we want to retrieve only the event_date and event_name columns. How should we do this?

Retrieving Specific Columns & Rows

Again, we can use ChatGPT to simplify this process.

A great thing about ChatGPT is that each search is not isolated. It can answer any follow-up questions and keep the context of our search history.

We can ask it to retrieve the event_date and event_name columns. It will gather the table ID from our previous message.

Copy the updated SQL query.

Paste this code back to BigQuery and Run the code. Expand the query result.

We can now verify that we are only retrieving the event_date and event_name columns.

While we have cut down our columns, we still have all the rows in our table. Looking at the lower-right portion of the window, you’ll see that we have close to 27,000 rows.

Since BigQuery stores table data in a column format, query costs are usually associated with the data type and the number of columns.

The number of rows does factor in the pricing, but reducing the number of rows will not decrease the cost. BigQuery reads all column data first before selecting specific rows.

Still, there may be instances where you only want to see specific rows of data. For example, we only want to see the top 10 events.

Let’s ask ChatGPT to show the first 10 rows of data. Copy the updated code.

After running this code in BigQuery, verify that we only get the first 10 rows.

What if we want to obtain specific rows based on certain criteria? How do we filter our query?

Filter Records

Let’s say we only want to look at specific types of events.

Looking at the event_name column, we can see the different events on our website, such as page_view, user_engagement, scroll, etc. What if we are interested in looking at only scroll event data?

Let’s open a new chat in ChatGPT. Provide the table ID and ask it to select the event_date and event_name columns and filter it by the scroll event.

Copy the code.

Run this code in BigQuery and confirm that we only get scroll events.

What if we want to look at specific date ranges?

SQL Dates

For dates, there is one slight modification we must remember.

Remove the date added to the end of the table ID and replace it with an asterisk when building your query.

In ChatGPT, copy the latest code and replace the date at the end of the table ID with an asterisk. Next, tell ChatGPT to get rows of data where the dates are between the 1st of December 2020 and the 1st of January 2021.

ChatGPT should return the code with an additional line beneath the WHERE clause indicating the dates we want to filter.

Retrieving GA4 Event Parameters

For a quick refresher, GA4 event parameters are additional data sent along with our events. If you look at the event_params field, we have repeated in the mode column, meaning that we have repeated fields.

Things become tricky when dealing with nested or repeated fields, as they are the most complex data types in BigQuery. Think of them as data within data.

If we click on the toggle, we’ll see different fields appearing. We’ll first discuss the key.

The key is a unique identifier for all our event parameters.

Looking at Preview, we can see the event_params field separated into different columns. The event parameter key identifier is in the event_params.key column.

We can have different value types for each of our keys. Toggling the value field, we can have string, integer, float, and double data types.

String values are texts, integer values are whole numbers, and float and double values are decimal numbers. All these are in different columns.

Let’s go back to the preview tab and see the columns containing the value of the event parameters. These are the columns starting with event_params.value.

Let’s look at specific examples to see how key-value pairs are stored.

We have the ga_session_number containing integer values, and the page_location containing URLs which are strings. The first is in the event_params .value.int_value column, and the latter is in the event_params .value.string_value column.

Now, how can we retrieve data from nested fields?

Unnesting Fields (Getting GA4 Event Parameters)

Let’s say we want to retrieve two columns – one with the event_name and the other with the corresponding value of the page_title key.

We’ll ask ChatGPT to look at the event_params.key column and check for the page_title. Then, return the corresponding value from the event_parans.value.string_value column.

Next, also instruct ChatGPT to return the event_name column. Then, provide the BigQuery table ID and state that the event_params field has repeating fields.

Initially, ChatGPT may return a lengthy code. We can ask it to simplify the code.

Copy the simplified code.

Let’s try this out. Alright, we have the event_name and page_title columns.

That’s how to retrieve data from nested fields.

🚨 Note: Another zero coding solution for BigQuery is the new GA4SQL tool. Check out our guide on it and learn how to use it.

Summary

We’ve seen how to use simple language to obtain data from BigQuery with ChatGPT. We learned what prompts to use with this tool to return SQL queries. We used GA4 data, but this process can apply to any dataset.

Are you interested in what other tasks ChatGPT can help with? Here are some ways how to use ChatGPT in digital marketing.

Want something more specific? Here are 10 ways to use ChatGPT with Google Tag Manager.

Have you tried ChatGPT to write SQL queries? Will you use BigQuery with your GA4 implementation? Let us know in the comments below!